Event-driven microservices have become popular in modern software development because they scale well, stay flexible, and tolerate failures. With technologies like Spring Boot and Kafka, developers can build responsive, loosely coupled systems. In this article, we will explore the fundamentals of event-driven microservices, Spring Boot, and Kafka, and then create a Java program that demonstrates how these technologies integrate, using a real-world example. Before we dive into the code, it is important to understand the key terms. Let's go through them one by one.

What Are Event-Driven Microservices?

Event-driven microservices architecture is a design pattern that allows communication between microservices through asynchronous events. Instead of traditional synchronous communication, where microservices call each other directly, event-driven architecture relies on events being produced and consumed.

An event represents an occurrence of significance in the system, and multiple microservices can subscribe to these events to react accordingly.
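To make the idea concrete, here is a minimal in-memory sketch of publishing an event to several subscribers. The names `UserCreated`, `InMemoryEventBus`, and `EventBusSketch` are made up for illustration and are not part of Spring or Kafka. Unlike a real broker, this bus delivers events synchronously inside one process, so it only shows the decoupling between producer and subscribers, not the asynchronous delivery.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical event type for illustration; not part of any library.
record UserCreated(long userId, String name) {}

// A deliberately tiny in-memory stand-in for a broker: it only fans events out.
class InMemoryEventBus {
    private final List<Consumer<UserCreated>> subscribers = new ArrayList<>();

    // A service registers interest without knowing who produces the events.
    void subscribe(Consumer<UserCreated> subscriber) {
        subscribers.add(subscriber);
    }

    // The producer hands the event to the bus and never calls a subscriber directly.
    void publish(UserCreated event) {
        for (Consumer<UserCreated> subscriber : subscribers) {
            subscriber.accept(event);
        }
    }
}

class EventBusSketch {
    public static void main(String[] args) {
        InMemoryEventBus bus = new InMemoryEventBus();
        List<String> log = new ArrayList<>();

        // Two independent "services" react to the same event.
        bus.subscribe(e -> log.add("notification: welcome " + e.name()));
        bus.subscribe(e -> log.add("analytics: signup " + e.userId()));

        bus.publish(new UserCreated(1L, "John Doe"));
        log.forEach(System.out::println);
    }
}
```

The producer only knows the bus, so a new subscriber can be added without touching the code that publishes the event.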

What is Spring Boot?

Spring Boot is a popular framework in the Java ecosystem that simplifies the development of stand-alone, production-ready Spring-based applications. It eliminates boilerplate configuration and allows developers to focus on writing business logic.

What is Apache Kafka?

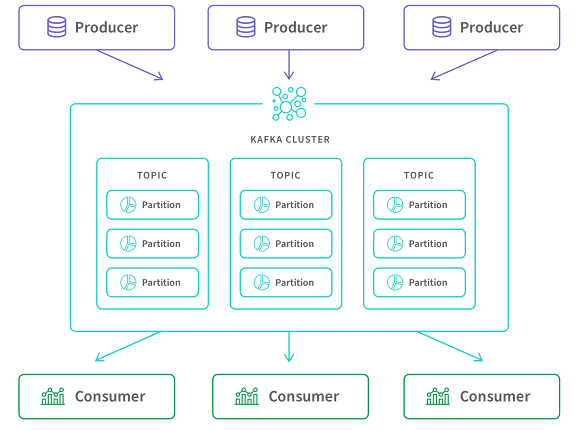

Apache Kafka is a distributed event streaming platform designed to handle real-time data feeds. It acts as a broker between event producers and consumers, enabling high-throughput, fault-tolerant, and scalable event processing. Kafka follows the publisher-subscriber model, where events are categorized into topics, and consumers subscribe to these topics to receive event data.
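The topic and subscriber model can be pictured as an append-only log that each consumer group reads at its own pace. The sketch below is a simplified, single-partition model in plain Java, not Kafka's actual implementation. `TopicLog`, `TopicLogSketch`, and the group names are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified, single-partition model of a topic; names are illustrative only.
class TopicLog {
    private final List<String> records = new ArrayList<>();
    // Next offset to read, tracked separately for each consumer group.
    private final Map<String, Integer> groupOffsets = new HashMap<>();

    // Producers append; existing records are never modified.
    void append(String record) {
        records.add(record);
    }

    // Returns the records this group has not read yet and advances its offset.
    List<String> poll(String groupId) {
        int from = groupOffsets.getOrDefault(groupId, 0);
        List<String> unread = new ArrayList<>(records.subList(from, records.size()));
        groupOffsets.put(groupId, records.size());
        return unread;
    }
}

class TopicLogSketch {
    public static void main(String[] args) {
        TopicLog userEvents = new TopicLog();
        userEvents.append("user 1 created");
        userEvents.append("user 1 updated");

        // Each group receives every record, independently of the other group.
        System.out.println(userEvents.poll("notification-service"));
        System.out.println(userEvents.poll("analytics-service"));

        userEvents.append("user 2 created");
        // Only the record appended since this group's last poll is returned.
        System.out.println(userEvents.poll("notification-service"));
    }
}
```

A group that has never polled starts at offset 0 in this model, which resembles reading a topic from the earliest available record.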

How to Create Event-Driven Microservices with Spring Boot and Kafka

It's time to dive into the actual development of an event-driven microservice using Spring Boot and Kafka. The following are the major steps needed to build the application.

Prerequisites

Before we dive into building event-driven microservices, ensure you have the following prerequisites:

• JDK (Java Development Kit) installed on your system.

• Apache Kafka and ZooKeeper installed and running (recent Kafka releases can also run in KRaft mode, which does not need ZooKeeper). You can download Kafka from the official website and follow the documentation for installation and setup.

• A Java IDE (Integrated Development Environment) such as Eclipse, IntelliJ, or Visual Studio Code.

Setting Up the Project

Create a new Spring Boot project using your preferred IDE or Spring Initializr (https://start.spring.io/). Select the following dependencies:

• Spring Web

• Spring for Apache Kafka

After generating the project, import it into your IDE.

Setting up Kafka

Before diving into coding, we need to set up Kafka. Download Kafka and follow the official documentation for installation and configuration. Once Kafka is up and running, create a topic named "user-events" as we will use it in our example.

Open the application.properties file and add the following Kafka configurations:

# Kafka Configuration
spring.kafka.bootstrap-servers=localhost:9092
spring.kafka.consumer.group-id=user-service-consumer-group
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.springframework.kafka.support.serializer.JsonSerializer
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.springframework.kafka.support.serializer.JsonDeserializer
# JsonDeserializer only creates classes from trusted packages.
# "*" is acceptable for a local demo; restrict it to your own package otherwise.
spring.kafka.consumer.properties.spring.json.trusted.packages=*
spring.kafka.consumer.auto-offset-reset=earliest

Creating the User Service

Let's consider a real-world example of a user service, responsible for managing user-related data. Our service will expose endpoints for user creation, update, and deletion. When a user is created, updated, or deleted, we want to produce an event to notify other microservices (e.g., a notification service or an analytics service) about the change.

User class representing a real-world entity

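public class User {
    private Long id;
    private String name;
    // Other attributes as needed

    // No-argument constructor, required for JSON deserialization
    public User() {}

    // Used by the controller when publishing a delete event
    public User(Long id, String name) {
        this.id = id;
        this.name = name;
    }

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }
    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
}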

User Controller

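import org.springframework.http.ResponseEntity;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.DeleteMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.PutMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class UserController {

    private final KafkaTemplate<String, User> kafkaTemplate;
    private static final String TOPIC = "user-events";

    // Spring injects the auto-configured KafkaTemplate
    public UserController(KafkaTemplate<String, User> kafkaTemplate) {
        this.kafkaTemplate = kafkaTemplate;
    }

    @PostMapping("/users")
    public ResponseEntity<String> createUser(@RequestBody User user) {
        // Save user to the database
        // ...

        // Produce an event to Kafka topic
        kafkaTemplate.send(TOPIC, user);
        return ResponseEntity.ok("User created successfully!");
    }

    @PutMapping("/users/{id}")
    public ResponseEntity<String> updateUser(@PathVariable Long id, @RequestBody User user) {
        // Update user in the database
        // ...

        // Produce an event to Kafka topic
        kafkaTemplate.send(TOPIC, user);
        return ResponseEntity.ok("User updated successfully!");
    }

    @DeleteMapping("/users/{id}")
    public ResponseEntity<String> deleteUser(@PathVariable Long id) {
        // Delete user from the database
        // ...

        // Produce an event to Kafka topic; only the id is known here
        kafkaTemplate.send(TOPIC, new User(id, ""));
        return ResponseEntity.ok("User deleted successfully!");
    }
}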

In the above example, the UserController is responsible for creating, updating, and deleting users. After the database operations, it uses the KafkaTemplate to publish User events to the "user-events" topic.
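One thing to notice is that the controller publishes a bare User for all three operations, so a consumer cannot tell a creation from an update or a deletion. A possible refinement, sketched here in plain Java, is to wrap the payload in an event that names what happened. `UserEventType`, `UserEvent`, and `UserEventSketch` are hypothetical names introduced for this sketch and do not appear in the example above.

```java
// Hypothetical event types; the controller above publishes the bare User instead.
enum UserEventType { CREATED, UPDATED, DELETED }

// Envelope stating what happened and to which user.
record UserEvent(UserEventType type, Long userId, String name) {

    static UserEvent created(Long userId, String name) {
        return new UserEvent(UserEventType.CREATED, userId, name);
    }

    static UserEvent updated(Long userId, String name) {
        return new UserEvent(UserEventType.UPDATED, userId, name);
    }

    // A deletion needs only the id, so no placeholder name is required.
    static UserEvent deleted(Long userId) {
        return new UserEvent(UserEventType.DELETED, userId, null);
    }
}

class UserEventSketch {
    public static void main(String[] args) {
        UserEvent event = UserEvent.deleted(1L);
        System.out.println(event.type() + " for user " + event.userId());
    }
}
```

Under this assumption the controller would declare a `KafkaTemplate<String, UserEvent>` and could call `kafkaTemplate.send(TOPIC, String.valueOf(userId), event)`. Using the user id as the message key keeps all events for one user in the same partition, so they are consumed in the order they were produced.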

Consuming Events

Now, let's create another microservice responsible for consuming and processing the User events.

import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class UserEventsConsumer {

    @KafkaListener(topics = "user-events", groupId = "user-service")
    public void consumeUserEvent(User user) {
        // Perform necessary processing on the received user event,
        // e.g., sending a notification or updating user analytics

        // Simulating the processing of the event
        System.out.println("Received User Event: " + user.getName());
    }
}

The UserEventsConsumer uses the @KafkaListener annotation to listen to the "user-events" topic. Whenever a new User event is published, this consumer will process the event accordingly.
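In a real consumer, the listener method usually does more than print. One way to keep that logic testable without a running broker is to have the listener delegate to a plain Java class, as in the sketch below. `SketchUser` is a minimal stand-in for the User class so that the example compiles on its own, and `UserNotificationHandler` is a hypothetical name. Neither is part of the example service.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal stand-in for the article's User class so this sketch compiles on its own.
class SketchUser {
    private final Long id;
    private final String name;

    SketchUser(Long id, String name) {
        this.id = id;
        this.name = name;
    }

    Long getId() { return id; }
    String getName() { return name; }
}

// Hypothetical handler: no Kafka or Spring types, so it can be unit-tested directly.
class UserNotificationHandler {
    private final List<String> sent = new ArrayList<>();

    // Business logic that the @KafkaListener method would delegate to.
    void handle(SketchUser user) {
        // The delete event in this article carries an empty name; skip the greeting then.
        if (user.getName() == null || user.getName().isBlank()) {
            return;
        }
        sent.add("Welcome, " + user.getName() + "!");
    }

    // Read-only copy of the messages produced so far.
    List<String> sentMessages() {
        return List.copyOf(sent);
    }
}

class HandlerSketch {
    public static void main(String[] args) {
        UserNotificationHandler handler = new UserNotificationHandler();
        handler.handle(new SketchUser(1L, "John Doe"));
        handler.handle(new SketchUser(1L, ""));
        System.out.println(handler.sentMessages());
    }
}
```

The listener then shrinks to a single call such as `handler.handle(user)`, and the handler can be exercised with ordinary unit tests.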

Running the Application

To run the example application, compile both microservices, and then start them. Verify that Kafka is running and the "user-events" topic is created.

• Start ZooKeeper and Kafka by running the respective commands in separate terminal windows or using the provided scripts.

• Create a topic named "user-events" to which the User service will publish events:

bin/kafka-topics.sh --create --topic user-events --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

• Run the Spring Boot application for both the User service and the UserEventsConsumer service.

• Use a tool like Postman or cURL to interact with the User service. Send POST and PUT requests to create and update users. For example:

# Create a new user

curl -X POST -H "Content-Type: application/json" -d '{"id": 1, "name": "John Doe"}' http://localhost:8080/users

# Update an existing user

curl -X PUT -H "Content-Type: application/json" -d '{"id": 1, "name": "Jane Doe"}' http://localhost:8080/users/1

Observe the output in the console where the UserEventsConsumer is running. It should show the received User events:

Received User Event: John Doe

Received User Event: Jane Doe
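The curl requests shown earlier can also be sent from Java using the JDK's built-in HTTP client, which is handy for a quick smoke test. The sketch below assumes the User service is listening on http://localhost:8080 as in the curl examples. `UserServiceClientSketch` and its helper methods are names invented for this example, and the JSON is built by simple string concatenation without escaping.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class UserServiceClientSketch {
    // Base URL taken from the curl examples; adjust if the service runs elsewhere.
    static final String BASE_URL = "http://localhost:8080";

    // Builds the same JSON body the curl examples send. No escaping is done.
    static String userJson(long id, String name) {
        return "{\"id\": " + id + ", \"name\": \"" + name + "\"}";
    }

    // Equivalent of the POST curl command.
    static HttpRequest createUserRequest(long id, String name) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/users"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(userJson(id, name)))
                .build();
    }

    // Equivalent of the PUT curl command.
    static HttpRequest updateUserRequest(long id, String name) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/users/" + id))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(userJson(id, name)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Requires the User service to be running; otherwise send() throws.
        HttpClient client = HttpClient.newHttpClient();

        HttpResponse<String> created = client.send(
                createUserRequest(1, "John Doe"), HttpResponse.BodyHandlers.ofString());
        System.out.println(created.statusCode() + " " + created.body());

        HttpResponse<String> updated = client.send(
                updateUserRequest(1, "Jane Doe"), HttpResponse.BodyHandlers.ofString());
        System.out.println(updated.statusCode() + " " + updated.body());
    }
}
```

Each request should trigger one line in the consumer's console, just as with the curl commands.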

Conclusion

Congratulations! You have successfully built event-driven microservices using Spring Boot and Kafka. By leveraging Kafka as the event broker, our microservices can communicate asynchronously and achieve loose coupling, enabling a highly scalable and responsive architecture.

Event-driven microservices play a pivotal role in modern application development. In this article, we explored the concept and learned how to implement it using Spring Boot and Kafka: we set up Kafka, created a User service that produces events, and built a consumer service that consumes and processes those events.
